Detecting AI "plagiarism" and other wild tales

If only it weren't for those darned kids!

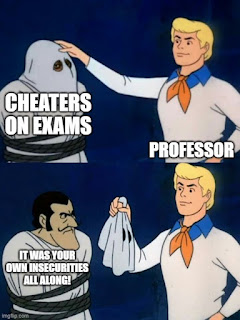

Admittedly, I haven't been blogging a lot these days. I keep meaning to come back and actually get into the habit of writing more frequently, but as one of my Twitter acquaintances once observed, you make a note of something to come back to, but then lose motivation (loosely paraphrasing Matt Crosslin - I think). In any case, à propos of TurnItIn's announcement this past week that they will now have an AI writing detector and AI writing resource center for educators as part of their offerings (wooooo! /s), I thought I'd spend a few minutes jotting down some thoughts. Warning: I am a bit of a Dr. Crankypants on this one...

If you haven't been paying attention, the early research is out on these kinds of detector schemes. People have been playing around with ChatGPT and AI author detectors and the results are in. These detectors just aren't good. Even GPTZero, "The World's #1 AI Detector" (🙄), isn't all that accurate. Change some words around, paraphrase, change a bit of the syntactic structure, and boom! Something that was flagged as likely to be AI-generated is no longer marked as AI-generated. Furthermore, texts that aren't AI-generated are falsely flagged as AI text for...reasons. Who knows how the system works? 🤔🤷♂️

All this really rubs me the wrong way, as an instructional designer, as an EdTech person, and as someone who works in higher ed. For reasons that I won't go into in too much detail, my work requires students to submit their capstone examinations through TII. The capstone examination is a culminating analytical essay (with citations and appropriate argumentation) that is undertaken once students have completed their MA coursework. You must pass this exam to earn your MA. As my department's EdTech Guru Extraordinaire, I administer this exam twice a year, so I get to set everything up in the LMS.

The capstone exam is a stressful time in the lives of students because even though many complete their courses with flying colors, they cannot graduate until the capstone essay is deemed acceptable (based on the assessment rubric that is shared with learners ahead of time). Students have two chances to pass, or they never receive a degree. Even if you are confident in your knowledge (like I was back in 2010 when I took the exam), you still feel the jitters and nervousness. My hands were shaking going into the computer lab. Back then you had four hours to answer four essay-style questions, so restroom breaks were also on a real "must go!!!" basis. Questions such as "What if I don't pass?", "What if I fail twice?", and "Were those two (or more) years wasted?" swirl through your mind. Luckily, the exam today is a bit different from what it was in my day, so students have more time, open books, and more opportunities to write something broader with more authentic prompts. Still, those questions about "what if I don't pass?" remain. TII, the run-of-the-mill, non-AI-enhanced variety, still produces false positives. It frikkin' flags the name of the university because it...(wait for it) appears in other students' submitted papers in the institutional repository! 🤦♂️

Now, as the exam gatekeeper, I view these results before I anonymize the exams for grading. I have to make a call as to whether someone plagiarized, and I always come to the conclusion that TII is full of 💩. On the plus side, because the exam is longer than it was when I was a student, students have access to the report and they can modify/update their submission to get rid of any inadvertent plagiarism (i.e., they forgot to cite something). Still, some folks get stressed out at seeing a 20% plagiarism report even though they've cited everything properly. I'd be stressed out too if I only had two chances at an exam that determined whether I would graduate and I got these reports! Now, add to that some black-box "likelihood of AI writing this" score where you don't know how the mechanism works, and you have no idea how to evaluate such an output. Even though my default stance is to trust the student, it still takes energy to reassure students when they see this kind of junk output...

It annoys me that such "tools" increase stress on learners, and add to the workload of care that professionals have to fit into their schedules to reassure students who are, rightfully, freaked out.
